Aesthetic Color Palettes with Qt and OpenCV in C++

The VFD Collective Updates, Visit us on Etsy

Stopwatch for Fluorescence: Firmware Update Version 2.3!

Simple Object Oriented Code in Plain C

We used C++ to learn all the essentials and beauties of OOP. The language supplies us with tools to create classes, methods and everything that comes with it, like inheritance and polymorphism. Based on our knowledge of structs in C we’ve developed an understanding of how classes work. But can we write object oriented code in plain C?

Fall #Coffeegram Moments

A brief history of Character Encoding + Printing Emojis using Terminal

This article is part of the series of educational articles for 'Einführung in die Informatik' (Introduction to Computer Science) and belongs to the supplementary material for its C/C++ tutorials.

The ASCII Character Encoding

Until now, we have always been working with a very limited set of characters when creating our programs in C. The ASCII encoding (American Standard Code for Information Interchange) consists of 128 characters in total: 95 of them printable and 33 non-printable. We're familiar with printable characters such as 'a', '9' or '%'. Their non-printable counterparts are control characters; e.g. the character with the numeric value 7 (BEL) makes your computer beep. In general, every ASCII character is mapped to a 7-bit number between 0 and 127, which fits into the data type char with one spare 8th bit. That is why we can easily create C strings: a C string is just a sequence of numbers, each mapped to an ASCII character and terminated by 0.

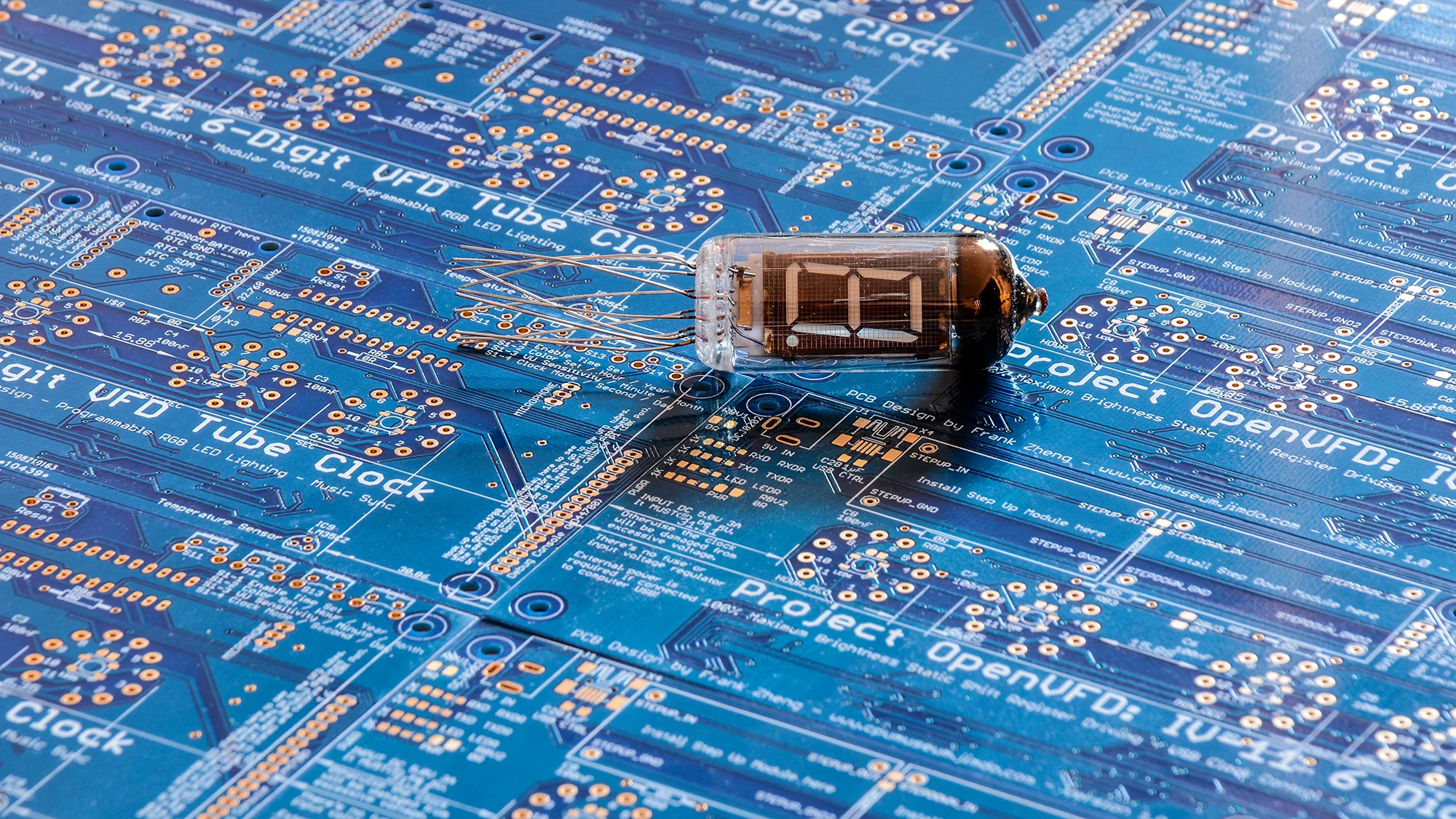

Even LCD controllers such as HD44780 (shown with LCD display left, VFD display right) work with ASCII characters

Getting the Most out of ASCII

So with 7 bits we can represent 128 characters. That isn't enough once we want to include the German Umlaute such as 'ä', or the e-acute 'é' found in, e.g., French. Well, we can easily use the remaining 8th bit of a byte (char), which gives us 128 more characters. Here you have it: the Extended ASCII characters (find them at the bottom of the ASCII map). For many non-English-speaking countries, though, there are still more characters that extended ASCII doesn't cover. That's why, from the late 80s until the turn of the millennium, the '8th bit space' was extended once more by the awfully named "ISO/IEC 8859" standard, which as of 2001 consists of 15 parts for different languages (part 12 was abandoned, so the numbering runs up to 16); we'll call it ISO for now. ISO-7 (ISO/IEC 8859-7), for instance, covers the modern Greek language by replacing the upper half of the extended ASCII map with the Greek alphabet. The different standards resulted in very uncomfortable switching between website encodings and incompatibilities for basically every non-English language.

Recreating encoding ambiguity: create a text file containing 'ß' and save it with the ISO-1 (Western, ISO Latin-1) encoding

‘ß’ has turned into ‘п’ in the Cyrillic (ISO-5) encoding!

Try it out yourself by creating a plain text (.txt) file with the German 'ß' and saving it with ISO Latin-1. Now open it in your favorite web browser and choose a different encoding, e.g. Cyrillic (ISO-5). See the 'ß' being mapped to another character? The file only contains the single byte 0xDF: Latin-1 maps it to 'ß', while ISO-5 maps the very same byte to 'п'.

An interesting related approach is ISO/IEC 2022, which uses escape sequences to switch between character sets, including the ISO 8859 parts, within a single text.

Coding it right: Unicode

What if 8 bits feel too restrictive? Easy: we just double the number of bits to store more characters. Hah, it's that simple! So those who were unhappy with ISO worked on a more universal encoding for every character of the modern world. Unicode's first draft was published in 1988 and initially used 16 bits, covering pow(2, 16) = 65,536 different characters in theory.

“Unicode is intended to address the need for a workable, reliable world text encoding. Unicode could be roughly described as ‘wide-body ASCII’ that has been stretched to 16 bits to encompass the characters of all the world’s living languages. In a properly engineered design, 16 bits per character are more than sufficient for this purpose.” (Joe Becker, Unicode 88)

It’s important to note that Unicode itself doesn’t define how characters are actually stored on disk. It maps each character to a number, known as a code point. Originally this was a 16-bit number; today the code space is no longer limited to 16 bits but holds 1,114,112 code points (U+0000 to U+10FFFF). The first 128 code points coincide with ASCII, so ‘a’ is still at 97, i.e. 0x61 in hex or U+0061 as a Unicode code point. The German ‘ä’ is in the Latin-1 Supplement block at U+00E4, and the bunny emoji ‘🐰’ is at U+1F430.

The most commonly used encoding of Unicode is UTF-8. As of today (November 2018), nearly 93% of all websites use UTF-8 for text encoding. UTF-8 is absolutely genius, as it stores characters with variable length, from a single byte (ASCII) up to four bytes. The most significant bits of the first byte of a sequence tell the length of the sequence, and every following byte starts with the bits 10. If we have a leading zero,

```c
// Binary representation for one byte ASCII (UTF-8) 'a'
uint8_t a = 0b01100001;
```

we know the sequence is just one byte. Two bits of 1 followed by a 0 marks the beginning of a two byte character:

```c
uint16_t ae = 0b 11000011 10100100; // Binary representation for 2 byte UTF-8 'ä'
```

What a surprise: a 3-byte character starts with three ones and a 4-byte character with four ones, respectively:

```c
// Binary representation for 4 byte UTF-8 bunny:
uint32_t bunny = 0b 11110000 10011111 10010000 10110000;
```

Please don’t separate the bytes with spaces like this in real C code; it won’t compile. I only did it here for readability. Also note that 0b binary literals are a GCC/Clang extension and only became standard C with C23.

If our terminal application has UTF-8 encoding enabled, we can now print special characters and emojis. Use

```c
printf("\xF0\x9F\x90\xB0\n");
```

to print a rabbit, or look up the hex representation of any other UTF-8 character. This isn't super intuitive, is it? Having to look up the hex representation of every special character we want to display. Well, there is a slightly more intuitive way to enter special characters: the wide character data type (wchar_t). With the locale set to UTF-8, you can simply enter the Unicode code point of a character, and the C library converts it into the UTF-8 byte sequence when printing. This works where wchar_t is 32 bits wide (e.g. Linux and macOS); on Windows, wchar_t has only 16 bits and cannot hold an emoji code point. Here's the simple program I used to print a few emojis on my terminal:

```c
#include <stdio.h>
#include <wchar.h>
#include <locale.h>

#define N 6

int main(void) {
    // Switch the C library to UTF-8 so %lc converts code points to UTF-8 bytes
    if (setlocale(LC_ALL, "en_US.utf-8") == NULL)
        fprintf(stderr, "UTF-8 locale not available\n");

    // Emoji array of Unicode code points. Look up on emojipedia!
    wchar_t emojis[N] = {0x1f332, 0x1f341, 0x1f4cd, 0x1f342, 0x1f698, 0x1f387};

    // Print emoji array
    printf("This could be the description of any inspirational insta picture\n");
    printf("#reasontoroam #nrthwst\n");
    for (int i = 0; i < N; ++i)
        printf("%lc", (wint_t)emojis[i]);   // %lc expects a wint_t
    printf("\n");
    return 0;
}
```

Turns out that you can even put emojis directly into your printf string. If the source file is saved as UTF-8 and your compiler interprets it correctly, the compiler does the byte translation for you: compile with 'gcc emoji.c -S' and look at the generated assembly file, and you will see the emojis stored as UTF-8 byte sequences (written as octal escapes).

```c
#include <stdio.h>

int main(void) {
    // Print emojied text: the emojis are stored as UTF-8 bytes in the literal
    printf("This could be the description of any inspirational insta picture\n");
    printf("#reasontoroam #nrthwst\n🌲🍁📍🍂🚘🎇\n");
    return 0;
}
```

```
L_.str.1:                               ## @.str.1
        .asciz  "#reasontoroam #nrthwst\n\360\237\214\262\360\237\215\201\360\237\223\215\360\237\215\202\360\237\232\230\360\237\216\207\n"
```

😊-Coding!

References and further reading:

Wikipedia articles on ASCII, ISO/IEC 8859, Unicode and UTF-8

http://www.developerknowhow.com/1091/the-history-of-character-encoding

https://www.joelonsoftware.com/2003/10/08/the-absolute-minimum-every-software-developer-absolutely-positively-must-know-about-unicode-and-character-sets-no-excuses/

http://www.polylab.dk/utf8-vs-unicode.html

Fluorescence & More: Dear Fellow Creators

Preparing Fluorescence Kits & The VFD Collective is now on GitHub!

The VFD Clock Software

Designing Your Own VFD Clock II: The Functional Circuit

Designing Your Own VFD Clock I: The Tube Circuit

This blog entry shows you what makes my VFD tube clock work, and I will take you through the process of turning some tubes, parts and lines of code into a glowing tube clock. This Instructable is easy to follow if you already have some basic electronics knowledge, but don't worry if not. I'll do my very best to keep everything short and simple.

The first part is about the tube and...